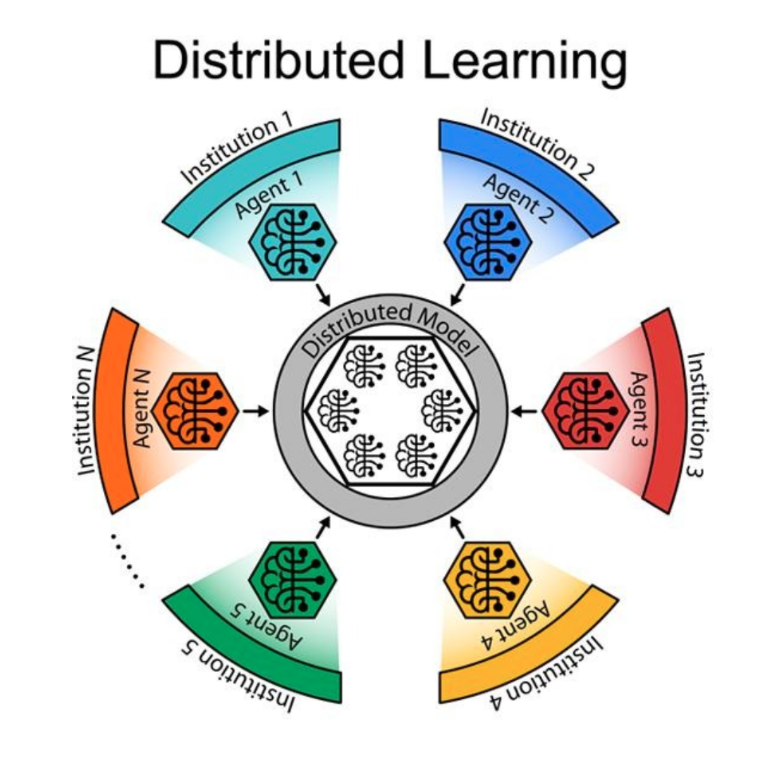

Distributed learning

Deep learning models require large amounts of training data to achieve high accuracy. The standard approach is to train them on a large centralized database of collected data. In healthcare, however, concerns about data sharing and privacy make such centralized databases difficult to create. Although large amounts of patient data are collected daily, these data remain distributed across many local healthcare centres and are not shared.

In distributed learning, model training occurs locally at each data stakeholder (e.g., a healthcare centre). The locally trained models do not contain individualized data; they consist only of abstracted mathematical parameters (i.e., neural network weights). After local training, the models are sent to a central server, which combines them into a single global model. Because this global model draws on a larger amount and variety of data, it can achieve higher accuracy than any locally trained model. The main benefit of distributed learning is that it increases the amount of data available for training deep learning models, and thereby their accuracy, while personal data never leaves the local data stakeholder, which retains control over how the data are used. Distributed learning also enables models to be trained on a greater variety of data, which can reduce the biases that arise from training on a limited local dataset.
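The workflow described above can be illustrated with a minimal sketch. It is not the implementation used in the publications listed below. It assumes a tiny logistic regression in place of a neural network, synthetic data in place of patient records, and a server that combines the local models by averaging their predicted probabilities (one simple form of ensembling). All function names, the data generator, and the hyperparameters are hypothetical.

```python
import math
import random


def train_local_model(features, labels, epochs=100, lr=0.1):
    """Fit a small logistic regression on one site's data.

    Only the learned parameters are returned; the data stays at the site.
    """
    weights = [0.0] * len(features[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            z = bias + sum(w * xi for w, xi in zip(weights, x))
            p = 1.0 / (1.0 + math.exp(-z))
            error = p - y  # gradient of the log loss with respect to z
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return {"weights": weights, "bias": bias}


def predict_proba(model, x):
    """Probability of the positive class under one local model."""
    z = model["bias"] + sum(w * xi for w, xi in zip(model["weights"], x))
    return 1.0 / (1.0 + math.exp(-z))


def ensemble_predict(local_models, x):
    """Central server step: average the predictions of all local models."""
    return sum(predict_proba(m, x) for m in local_models) / len(local_models)


def make_site_data(rng, n_samples):
    """Hypothetical synthetic data: the label is 1 when x0 + x1 > 0."""
    features = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(n_samples)]
    labels = [1 if x[0] + x[1] > 0 else 0 for x in features]
    return features, labels


rng = random.Random(0)  # fixed seed so the sketch is reproducible

# Each of three simulated sites trains on its own data and shares only parameters.
local_models = []
for _ in range(3):
    site_features, site_labels = make_site_data(rng, 50)
    local_models.append(train_local_model(site_features, site_labels))

# The server never sees site_features or site_labels, only local_models.
print(ensemble_predict(local_models, [0.9, 0.9]))
print(ensemble_predict(local_models, [-0.9, -0.9]))
```

In this sketch the only objects that cross the site boundary are the dictionaries of weights and biases. Averaging predictions is one possible combination rule; other schemes, such as averaging the weights themselves, would replace `ensemble_predict` and leave the local training step unchanged.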

Publications

Tuladhar A, Gill S, Ismail Z, Forkert ND: Building machine learning models without sharing patient data: A simulation-based analysis of distributed learning by ensembling. Journal of Biomedical Informatics, 106:103424, 2020

Tuladhar A, Gill S, Ismail Z, Forkert ND: Distributed learning in medicine: training machine learning models without sharing patient data. Machine Learning for Health Workshop at NeurIPS, Vancouver, Canada, 2019

Team members

- Anup Tuladhar
- Raissa Souza